Introduction

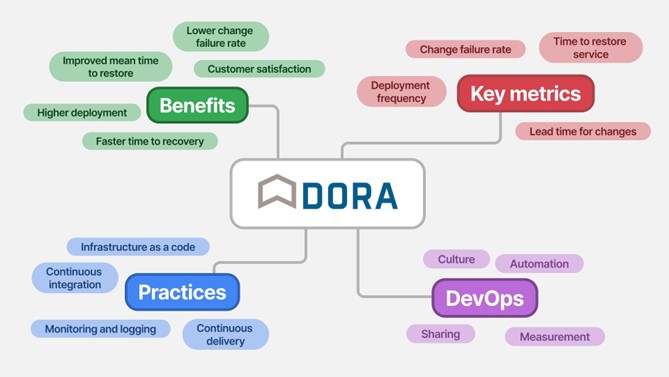

The DevOps Research and Assessment (DORA) work extends far beyond the State of DevOps reports, encompassing various research projects aimed at uncovering the scientific underpinnings of software development and operations excellence. Even though the study is 10 years old, it is often misunderstood, and its value remains underutilised by many companies. Understanding the data and the context provides the opportunity to use this powerful framework for improving practices, fostering DevOps culture and modernising the software development lifecycle.

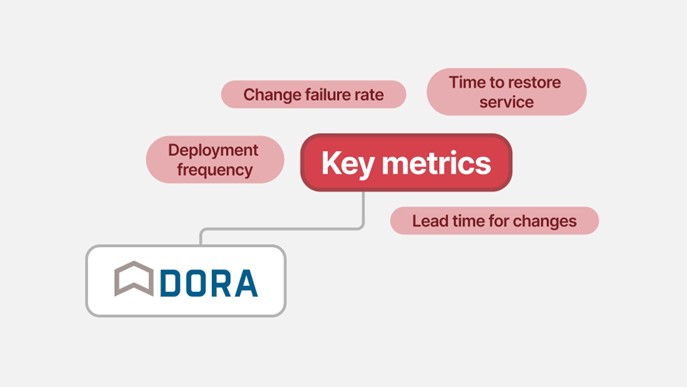

DORA's rigorous statistical research identified four key metrics essential for improving financial results and software delivery performance: deployment frequency, lead time for changes, change failure rate and mean time to restore.

These metrics serve as benchmarks for organisations striving to optimise their development pipelines and drive better business outcomes. By tracking and improving these metrics, teams can assess their performance levels and pinpoint areas for enhancement.

Even though the concept is not new, it is still not broadly understood or adopted.

Why is DORA important?

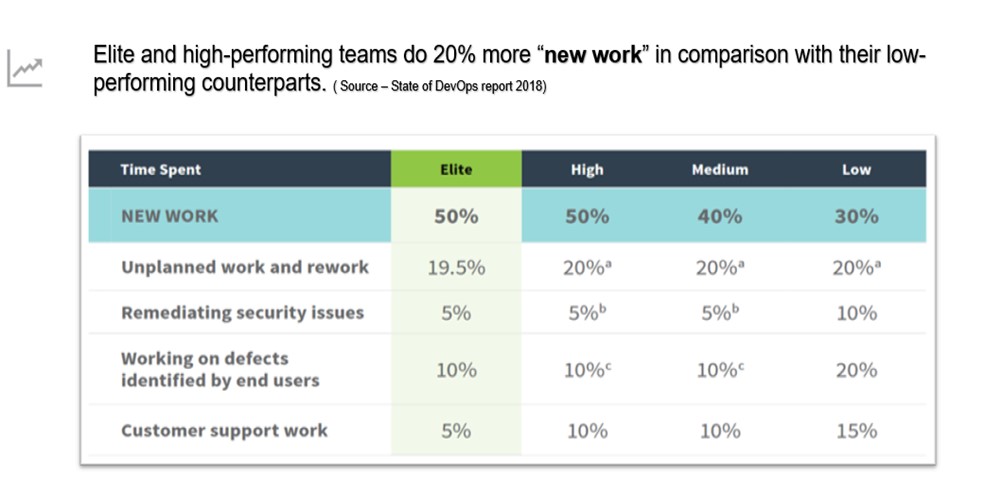

According to DORA research, elite and high-performing teams enjoy 20% more time for new work. This underscores the importance of tracking and acting upon DORA metrics to achieve tangible improvements in software delivery capability and operational performance.

Implementing DORA at Sabre

As a leading technology company, Sabre recognised the importance of standardising metric collection across diverse teams. The goal was to develop an easy-to-use self-service platform that would empower teams to measure metrics automatically and facilitate continuous improvement.

Challenges and Solutions

Sabre encountered several challenges in standardising metric collection, including issues with data normalisation and metric accuracy. While collecting data for three of the metrics (change failure rate, mean time to restore, and deployment frequency) proved relatively straightforward, obtaining data for lead time was much harder. To tackle these challenges, Sabre appointed a technical working group and fostered community engagement among DORA enthusiasts. By building a culture of collaboration and continuous improvement, Sabre overcame these hurdles and built a self-service platform for tracking DORA metrics.

Lead Time challenge

One of the key metrics for understanding and optimising team performance is Lead Time, defined here as the median time from commit to deployment into production. However, calculating Lead Time accurately can be difficult because data sources are disparate and team workflows vary. To address this, Sabre's technical working group defined a standardised approach and documented it in an Architecture Decision Record (ADR), so that all software engineering teams in a distributed environment understand its opportunities and consequences.
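Written as a formula, the definition looks roughly like this. The notation is ours rather than taken from the ADR: $C$ is the set of commits deployed to production during the measurement period, $t_{\text{commit}}(c)$ is the time commit $c$ was made and $t_{\text{deploy}}(c)$ is the time it reached production.

$$
\text{Lead Time} = \operatorname*{median}_{c \in C} \bigl( t_{\text{deploy}}(c) - t_{\text{commit}}(c) \bigr)
$$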

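Traditionally, Lead Time is calculated from commit data sourced from Git repositories. However, extracting this data automatically from Git can be error-prone, and commit data may change over time due to user actions: for example, rebasing or squashing creates new commits with new SHAs, and a force-push can remove the originals from the branch.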

To mitigate these challenges, consider the following practices:

- Embed Commit Data in Change Requests: Extend the description field of each change request with a JSON string containing references to the commits it includes. This links commit data directly to each change request, providing a single source of truth.

- Standardise Data Format: Define a structured format for the JSON string, including commit SHA, date, author (optional), and message (optional). This standardised format simplifies the extraction and calculation process.

- Automate Data Extraction: Implement tools to automate the extraction of commit data and populate change request descriptions. This reduces manual effort and ensures data accuracy.

Example:
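A minimal Python sketch of this convention, assuming a hypothetical `DORA-COMMITS:` marker line that separates the human-readable description from the JSON payload. The marker, the `commits` key and the helper names `build_description` and `extract_commits` are illustrative assumptions, not Sabre's actual format.

```python
import json

# Hypothetical marker line; the real convention may differ.
MARKER = "DORA-COMMITS:"
OPTIONAL_FIELDS = ("author", "message")


def build_description(text: str, commits: list[dict]) -> str:
    """Append the commit list to a change request description as one JSON line."""
    entries = []
    for c in commits:
        entry = {"sha": c["sha"], "date": c["date"]}  # required fields
        entry.update({k: c[k] for k in OPTIONAL_FIELDS if k in c})
        entries.append(entry)
    # json.dumps escapes newlines inside messages, so the payload stays on one line
    payload = json.dumps({"commits": entries})
    return f"{text}\n{MARKER} {payload}"


def extract_commits(description: str) -> list[dict]:
    """Return the commits embedded in a description, or [] if none are present."""
    for line in description.splitlines():
        if line.startswith(MARKER):
            return json.loads(line[len(MARKER):])["commits"]
    return []


desc = build_description(
    "Release 1.4.2 of booking-service",
    [{"sha": "3f2a9c1", "date": "2024-03-01T09:15:00+00:00", "author": "jdoe"}],
)
print(desc.splitlines()[1])
# DORA-COMMITS: {"commits": [{"sha": "3f2a9c1", "date": "2024-03-01T09:15:00+00:00", "author": "jdoe"}]}
```

Keeping the payload on a single, clearly marked line lets free-text edits to the rest of the description coexist with automated extraction.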

Once the commit data is gathered, the Lead Time metric can be calculated efficiently. Here’s how:

- Commits Included in Calculation: For each commit on the main branch, measure the time elapsed from when the commit was made until it was deployed to the production environment.

- Metric Calculation Algorithm: For each commit, subtract the commit date from the deployment date of its associated change request to obtain that commit's Lead Time, then compute the median Lead Time across all commits.

Example of the algorithm:
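One possible Python sketch of the steps above. It assumes each change request is a dict with a hypothetical `deployed_at` field and the `commits` list from the description, with ISO 8601 timestamps that carry an explicit UTC offset. Counting a commit that appears in several change requests only once, against its earliest deployment, is our own assumption.

```python
from datetime import datetime
from statistics import median
from typing import Optional


def lead_times_hours(changes: list[dict]) -> list[float]:
    """Lead Time per commit, in hours, from commit date to production deployment.

    A commit listed in several change requests is counted once, against the
    earliest deployment that shipped it (an assumption of this sketch).
    """
    shipped = {}  # sha -> (commit time, earliest deployment time)
    for change in changes:
        deployed = datetime.fromisoformat(change["deployed_at"])
        for commit in change["commits"]:
            committed = datetime.fromisoformat(commit["date"])
            previous = shipped.get(commit["sha"])
            if previous is None or deployed < previous[1]:
                shipped[commit["sha"]] = (committed, deployed)
    return [(d - c).total_seconds() / 3600 for c, d in shipped.values()]


def median_lead_time_hours(changes: list[dict]) -> Optional[float]:
    """Median Lead Time in hours, or None when there is no data."""
    times = lead_times_hours(changes)
    return median(times) if times else None


changes = [
    {"deployed_at": "2024-03-02T10:00:00+00:00",
     "commits": [{"sha": "a1", "date": "2024-03-01T10:00:00+00:00"},
                 {"sha": "b2", "date": "2024-03-02T08:00:00+00:00"}]},
    {"deployed_at": "2024-03-05T10:00:00+00:00",
     "commits": [{"sha": "c3", "date": "2024-03-03T10:00:00+00:00"}]},
]
print(median_lead_time_hours(changes))  # 24.0
```

The median is used rather than the mean so that a few long-lived commits do not dominate the result.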

After calculating Lead Time, categorise the results into predefined buckets based on time intervals (e.g., one day, one week, one month). This provides a clear understanding of deployment efficiency across various timeframes.
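The bucketing step might be sketched as follows. The boundaries follow the example intervals above, expressed in hours; approximating "one month" as 30 days and the bucket labels are assumptions of this sketch.

```python
from collections import Counter

# Hypothetical bucket boundaries in hours, based on the example intervals;
# "one month" is approximated as 30 days.
BUCKETS = [
    (24, "less than one day"),
    (24 * 7, "one day to one week"),
    (24 * 30, "one week to one month"),
]
OVERFLOW = "more than one month"


def bucket(lead_time_hours: float) -> str:
    """Return the label of the first bucket whose upper bound exceeds the value."""
    for upper, label in BUCKETS:
        if lead_time_hours < upper:
            return label
    return OVERFLOW


def distribution(lead_times: list[float]) -> Counter:
    """Count how many lead times fall into each bucket."""
    return Counter(bucket(h) for h in lead_times)


print(distribution([2, 24, 48, 1000]))
```

The same `bucket` function can classify a single median value or, through `distribution`, show how individual commits spread across the timeframes.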

In summary, by adopting this standardised approach to measuring Lead Time, software engineering teams can gain deeper insight into their deployment processes, identify areas for improvement, and ultimately enhance overall performance. By leveraging automation, standardisation, and platform support, organisations can streamline data collection and analysis, paving the way for continuous optimisation and innovation.

Platform Engineering Support

To support this standardised approach, it’s essential to provide platform-level support and consider the implications:

- Maintain Tooling: Maintaining a tool that retrieves the latest commit SHA, calculates the commit list locally, schedules orchestration, and reports commits enhances automation and streamlines workflows.

- Support CI Pipeline Integration: Add a step to the CI pipeline that populates the commit list, ensuring that teams add commit data to change requests consistently.

- Maintain Dashboard Tool/UI: Integrate with DORA dashboard tools (e.g., Qlik Sense, Sleuth) to calculate Lead Time directly from commit data. This ensures seamless reporting and analysis for all applications that follow the recommended convention.
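As an illustration of the tooling and CI step, here is a hedged Python sketch that lists the commits reachable from `HEAD` but not from the last deployed SHA, using `git log` with a tab-separated pretty format. How the last deployed SHA is obtained, and the helper names `commits_since` and `parse_git_log`, are hypothetical; using the committer date (`%cI`) rather than the author date is also a choice this sketch makes.

```python
import subprocess

# Tab-separated fields: SHA, committer date (strict ISO 8601), author name, subject.
GIT_FORMAT = "%H%x09%cI%x09%an%x09%s"


def parse_git_log(output: str) -> list[dict]:
    """Turn `git log --format=GIT_FORMAT` output into commit dicts."""
    commits = []
    for line in output.splitlines():
        if not line.strip():
            continue
        # maxsplit=3 keeps any tabs inside the subject in the message field
        sha, date, author, message = line.split("\t", 3)
        commits.append({"sha": sha, "date": date, "author": author, "message": message})
    return commits


def commits_since(last_deployed_sha: str, repo: str = ".") -> list[dict]:
    """Commits reachable from HEAD but not from the last deployed SHA."""
    result = subprocess.run(
        ["git", "-C", repo, "log", f"--format={GIT_FORMAT}", f"{last_deployed_sha}..HEAD"],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_git_log(result.stdout)
```

In a CI job, the list returned by `commits_since` could then be serialised into the change request description before the deployment is recorded.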

I have metrics… what next?

Currently, we are working on understanding the data, identifying areas for improvement, and finally monetising the outcomes. This would be much harder without the DORA framework and the standardisation it delivers. People are starting to observe the metrics, make low-level improvements, and assess their impact.

This has taken the form of gamification, in which people can easily see the impact of the technical optimisations they make. We wanted to build an offer for innovative, ambitious teams striving for greater productivity and continuous improvement.

Conclusion

DORA metrics serve as a powerful tool for organisations seeking to optimise their software development and delivery processes. By measuring, understanding, and improving these metrics, organisations can unlock their full potential and drive better outcomes for both their teams and customers. Through continuous measurement and improvement, organisations can systematically enhance their DevOps practices, paving the way for greater success in today's competitive landscape.