This article is the final installment of a three-part series on optimizing airline ancillary bundles based on customer preferences. In Part 1, we discussed data collection and customer segmentation methods. In Part 2, we met traveler Caroline and learned how recommendation and offer engines presented her with a set of personalized offers, from which she found a desirable bundle for an anniversary trip to Spain with her boyfriend.

In this section, we will cover the use of experimentation engines to provide a “test-and-learn” framework for ancillary bundles that will further improve our marketing effectiveness, ensuring that travelers receive an offer that is both tailored to their preferences and priced competitively.

Experimentation Engine

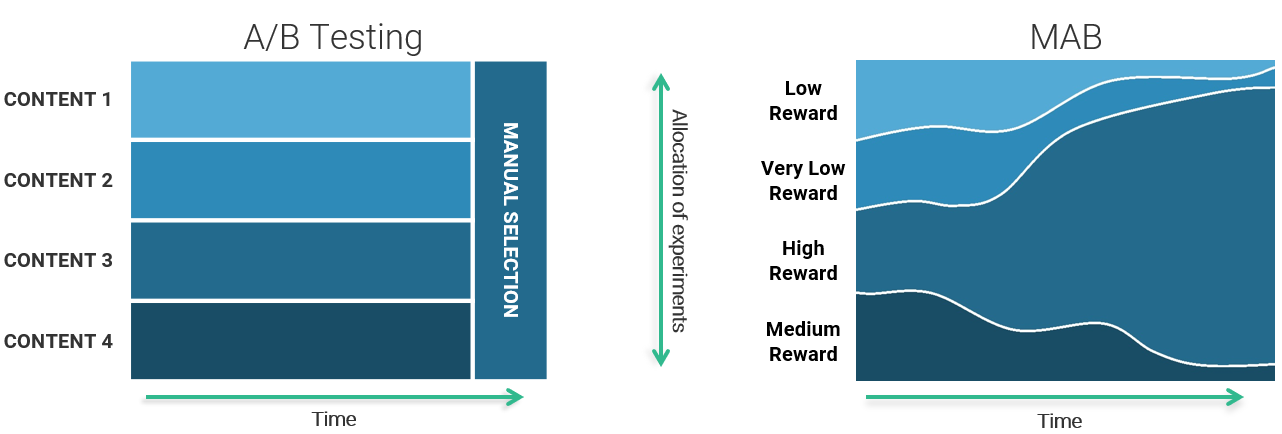

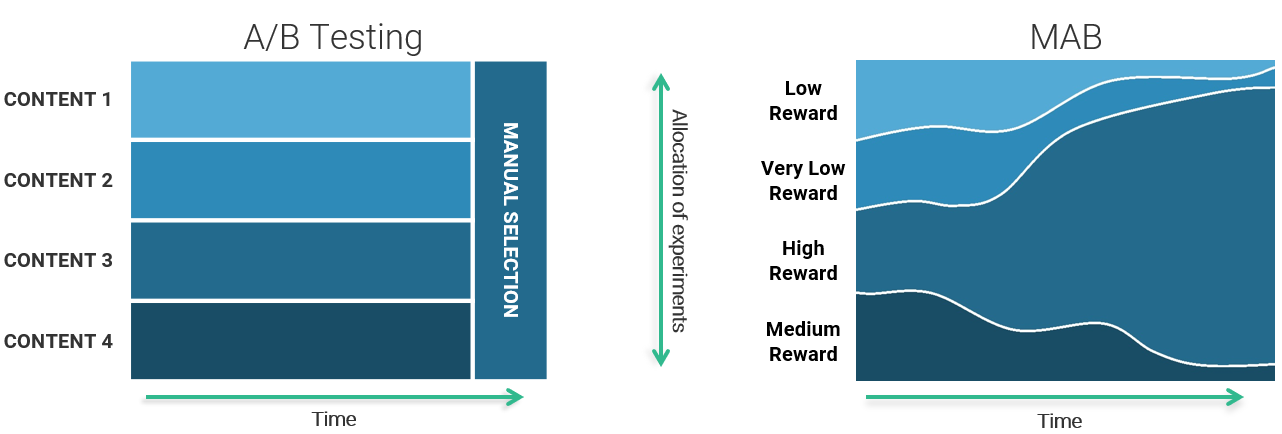

Consumer expectations and market conditions change, so it is useful to have self-learning tools that can automatically adapt to evolving circumstances. Reinforcement learning techniques are often used for this purpose: the artificial intelligence is put into a game-like situation in which it receives rewards (or penalties) for its actions. Specifically, Sabre Labs has seen good success using multi-armed bandit (MAB) experimentation methods. Stated very simply, MAB is an alternative to classic A/B testing in which the AI continuously re-focuses its efforts on the actions that yield a positive outcome, rather than holding the split between variants fixed until the test ends. The name comes from the casino slot machine (the “one-armed bandit”), into which the gambler drops a coin, pulls a lever (or arm), and receives a payout (or not). In a multi-armed bandit problem, the gambler plays several slot machines at once: there are multiple “levers,” each with a different likelihood of success, and using machine learning we can narrow down which levers deliver the highest rewards.
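To make the “levers” idea concrete, here is a minimal sketch using Thompson sampling, one common bandit strategy. This is an illustration only: the article does not say which algorithm Sabre uses, and the class name, offer names, and conversion rates below are all hypothetical.

```python
import random


class BetaBandit:
    """Thompson-sampling bandit with a Beta(1, 1) prior on each arm's conversion rate."""

    def __init__(self, arms, seed=None):
        self.rng = random.Random(seed)
        # Conversions and non-conversions observed so far for each arm.
        self.wins = {arm: 0 for arm in arms}
        self.losses = {arm: 0 for arm in arms}

    def choose(self):
        # Draw one plausible conversion rate per arm from its posterior,
        # then show the arm with the highest draw.
        draws = {
            arm: self.rng.betavariate(self.wins[arm] + 1, self.losses[arm] + 1)
            for arm in self.wins
        }
        return max(draws, key=draws.get)

    def update(self, arm, converted):
        # Record whether the customer accepted the offer.
        if converted:
            self.wins[arm] += 1
        else:
            self.losses[arm] += 1

    def pulls(self, arm):
        return self.wins[arm] + self.losses[arm]


def simulate(true_rates, rounds=5000, seed=7):
    """Run the bandit against fixed, made-up conversion rates (an assumption)."""
    bandit = BetaBandit(list(true_rates), seed=seed)
    customers = random.Random(seed + 1)
    for _ in range(rounds):
        arm = bandit.choose()
        bandit.update(arm, customers.random() < true_rates[arm])
    return bandit


if __name__ == "__main__":
    # Hypothetical offers and conversion rates, for illustration only.
    rates = {"seat_upgrade": 0.12, "extra_bag": 0.05, "lounge_pass": 0.03}
    result = simulate(rates)
    for offer in rates:
        print(offer, result.pulls(offer))
```

Unlike a fixed A/B split, the share of customers shown each offer shifts as evidence accumulates, so weaker offers are shown less and less often while still being sampled occasionally.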

Multi-armed bandit experimentation has a variety of business uses. First, it can be used as part of an automated framework; an example is Sabre’s recommender system, which automatically assigns experiments, observes the outcomes, and uses the results to improve the quality of its model-generated recommendations over time. Second, retailers may decide to run one-time experiments to help answer business questions such as:

- “What is the best price for a product in this market?”

- “What text description of my product works best for certain customer types or selling channels?”

- “What product images or color schemes provide the highest conversion rates for these items and customer types?”

Adapted from: Frosmo, Multi-Armed Bandit by Joni Turunen, 2017

Other Applications

We will discuss two noteworthy tactics in this section: post-booking up-/cross-sell campaigns and a form of dynamic pricing known as “market-adaptive pricing.”

An alternative to providing real-time offers in the initial sales session is to have the customer make an initial booking and then follow up via email with upsell offers that have been vetted for sales effectiveness through a MAB method. So, Caroline’s booking might trigger an email offering her the chance to name the price she is willing to pay to upgrade her seats to a higher class (because this is the offer that travelers comparable to her have purchased most frequently in the past).

A related (and hugely exciting!) opportunity to improve offer management solutions is market-adaptive dynamic pricing. These systems use streaming (or real-time) data sources with information on current market conditions (e.g., Sabre lowfare search shopping data), combined with customer choice models, to monitor and optimize competitive positioning on an ongoing basis. Commercial tools that consider traditional airfare prices and schedules when computing item attractiveness already exist (e.g., Sabre Dynamic Availability). However, there is significant opportunity to extend these tools to also consider ancillary item attractiveness and price, further personalizing the experience and providing more competitive offers. Research in this area is ongoing and will be especially important as new distribution standards (such as IATA NDC) become more widely adopted.
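As a rough illustration of how a customer choice model can feed a pricing decision, the sketch below uses a multinomial logit model, one widely used form of choice model. It is an assumption made for illustration, not a description of Sabre’s tools: the utility function, the price-sensitivity coefficient, and every fare and value in it are hypothetical.

```python
import math


def choice_probabilities(utilities):
    """Multinomial logit: P(i) = exp(u_i) / sum_j exp(u_j)."""
    peak = max(utilities.values())  # subtract the max for numerical stability
    weights = {name: math.exp(u - peak) for name, u in utilities.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def offer_utility(price, ancillary_value, price_sensitivity=0.01):
    """Hypothetical linear utility: value of the bundle minus a price penalty."""
    return ancillary_value - price_sensitivity * price


def best_price(candidate_prices, ancillary_value, competitor_utilities,
               price_sensitivity=0.01):
    """Pick the candidate price with the highest expected revenue,
    where expected revenue = price * P(customer chooses our offer)."""

    def expected_revenue(price):
        utilities = dict(competitor_utilities)
        utilities["ours"] = offer_utility(price, ancillary_value, price_sensitivity)
        return price * choice_probabilities(utilities)["ours"]

    return max(candidate_prices, key=expected_revenue)


if __name__ == "__main__":
    # Made-up competing offers, as if observed in current shopping data.
    competitors = {
        "competitor_a": offer_utility(300, 1.0),
        "competitor_b": offer_utility(340, 1.5),
    }
    print(best_price([280, 300, 320, 340, 360], 1.2, competitors))
```

In a market-adaptive setting, the competitor utilities would be refreshed as new shopping data arrives and the price re-evaluated, so the offer’s positioning tracks the market rather than a static fare table.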

Conclusions

A key to customer centricity is the ability to offer the right product or bundle to the right customer at the right price at the right time, based on both stated and revealed customer preferences. Understanding these preferences and generating pertinent, targeted responses to customer requests during the sales-process lifecycle is the new reality. Generating targeted offers that truly resonate with customers requires a significant investment in data infrastructure and advanced analytics. We are confident, however, that the opportunity is at least equally significant, as these data enable a better understanding of consumer behavior and preferences and will generate incremental revenue through targeted offers that ensure repeat, profitable customers.
